The Dual Faces of AI: From ChatGPT to Malicious Models

Introducing

The realm of Artificial Intelligence (AI) is rapidly evolving, ushering in transformative tools like ChatGPT and Microsoft Copilot, which have revolutionized how we interact with technology. However, this innovation comes with a darker side, embodied by malicious AI tools like WormGPT, PoisonGPT, and FraudGPT, each designed with nefarious purposes. Understanding the mechanics, applications, and security implications of these technologies is crucial, especially in the field of cyber threat intelligence.

Understanding AI, ChatGPT, and Microsoft Copilot

What is AI (Artificial Intelligence)?

Artificial Intelligence (AI) refers to the simulation of human intelligence in machines that are programmed to think and learn like humans. The term can also be applied to any machine that exhibits traits associated with a human mind, such as learning and problem-solving. AI is a broad field that encompasses various technologies, including machine learning, natural language processing, robotics, and computer vision.

The Conversational AI

ChatGPT is a state-of-the-art language model developed by OpenAI, based on the GPT (Generative Pretrained Transformer) architecture. It specializes in generating human-like text responses, making it an effective tool for a range of applications, from customer service to content creation. ChatGPT stands out for its ability to understand and generate contextually relevant and coherent text, based on the input it receives.

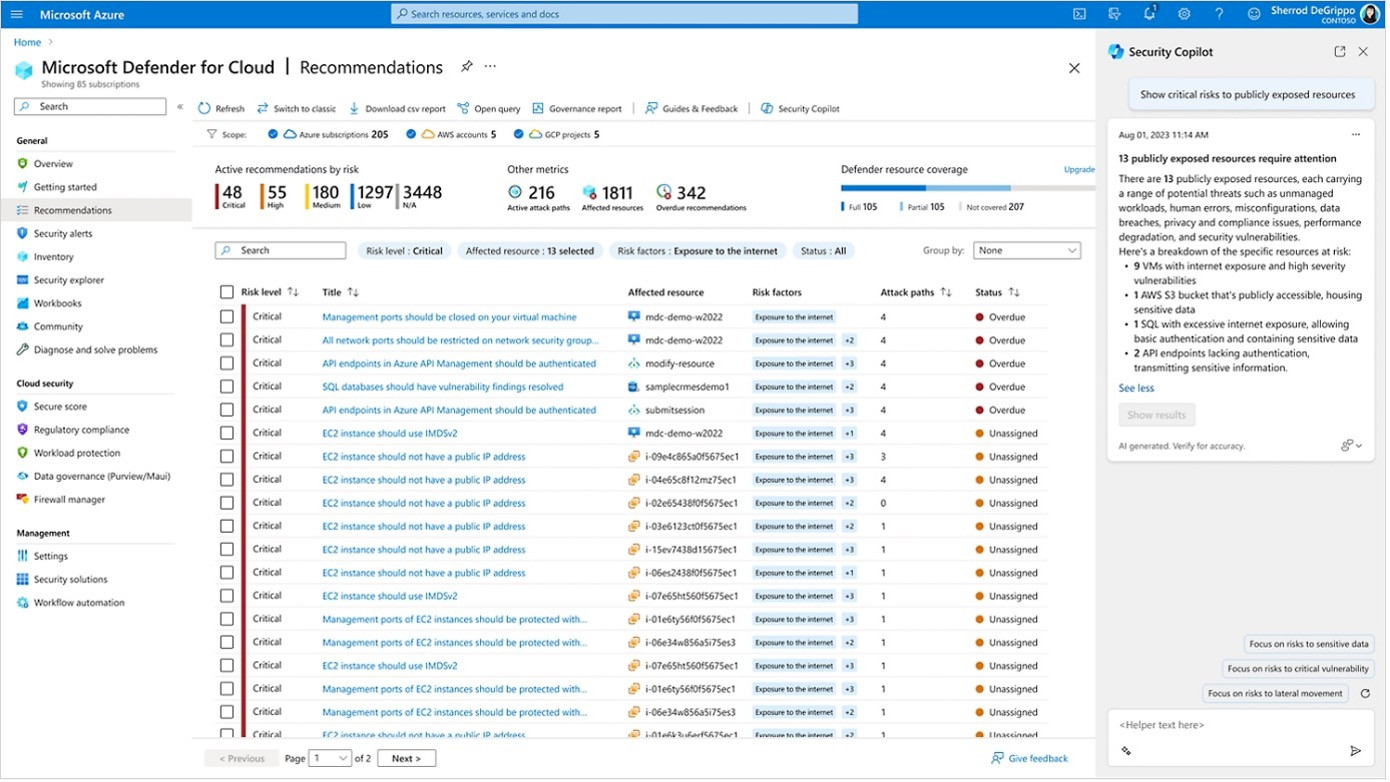

Microsoft Copilot: AI-Powered Programming Assistant

Microsoft Copilot is an AI-powered assistant that enhances productivity and streamlines tasks for users across the Microsoft Cloud. It's designed to make complex tasks more manageable and fosters a collaborative environment, improving the user experience. Copilot is integrated into various Microsoft applications, offering innovative solutions and support for a wide range of functions.

AI and Large Language Models: The Backbone of ChatGPT and Microsoft Copilot

At the heart of AI tools like ChatGPT and Microsoft Copilot are Large Language Models (LLMs). These advanced AI systems specialize in understanding and generating human language. They are developed by training on vast amounts of text data, including books, articles, websites, and other sources. This training enables them to recognize patterns and structures in language, thus allowing them to perform a plethora of tasks such as answering questions, generating text, and even creating creative content.

These models are typically built on architectures of neural networks, with a focus on a specific type of structure for processing information. These are adept at recognizing and processing complex relationships between words and phrases in texts. However, despite their impressive capabilities, LLMs also pose challenges, including biases in training data, privacy concerns, ethical considerations, and the potential spread of misinformation.

The Dark Side of AI: WormGPT, PoisonGPT, and FraudGPT

WormGPT: The Phishing Specialist

WormGPT stands out as a specialized AI tool designed to aid cybercriminals, particularly in phishing and Business Email Compromise (BEC) attacks. It lacks built-in safeguards against misuse, allowing it to generate convincingly fake emails. A primary use case is the development of phishing emails and BEC attacks, significantly increasing the scale and sophistication of cyberattacks.

PoisonGPT: The Misinformation Agent

PoisonGPT, a proof-of-concept LLM, is designed to spread targeted misinformation. It behaves like a regular LLM under normal circumstances but can be programmed to deliver false information on specific queries. This model highlights the risks associated with LLMs and their potential manipulation, emphasizing the need for regulatory frameworks to mitigate these risks

FraudGPT: The Criminal Toolkit

FraudGPT emerges as a nefarious AI tool, specifically engineered for criminal activities and promoted on Darknet marketplaces and Telegram channels. This tool is adept at creating sophisticated spear-phishing emails, generating undetectable malware, and identifying security vulnerabilities. It also facilitates the creation of phishing sites and tools, thereby supporting a wide range of illegal activities, including credit card fraud. The tool's subscription-based model, ranging from $200 per month to $1,700 per year, reflects its accessibility and appeal among cybercriminals. The revelation of FraudGPT by the Netenrich Threat Research Team highlights an alarming trend: the increasing use of generative AI models by cybercriminals to execute more advanced attacks. This development underscores the need for organizations and individuals to bolster their cybersecurity defenses against such sophisticated threats.

The Contrast: Ethical Use and Malicious Intent

The stark contrast between AI tools like ChatGPT and their malicious counterparts lies in their intended use. While ChatGPT and similar tools aim to assist and improve human-machine interactions, tools like WormGPT, PoisonGPT, and FraudGPT are explicitly designed for harmful activities. The latter lack ethical boundaries and safety mechanisms, making them potent tools in the hands of cybercriminals.

Conclusion

The landscape of AI is a battleground of innovation and exploitation. On one side, tools like ChatGPT and Microsoft Copilot represent the pinnacle of beneficial AI applications, enhancing our daily digital interactions. On the other, the emergence of malicious models like WormGPT, PoisonGPT, and FraudGPT reveals a sinister use of AI, posing significant threats to cybersecurity. As a cyber security architect, staying informed and prepared for these advancements is vital. The future of AI is undeniably promising, but it demands vigilant oversight to ensure its responsible and secure use.

Sources for Further Information and Research

Semantic Scholar: A free, AI-powered research tool for scientific literature, based at the Allen Institute for AI.

arXiv: An open-access archive for scholarly articles in physics, mathematics, computer science, quantitative biology, quantitative finance, statistics, electrical engineering, systems science, and economics, including many AI research papers.

Google Scholar: A freely accessible web search engine that indexes the full text or metadata of scholarly literature across an array of publishing formats and disciplines, including AI.

IEEE Xplore Digital Library: Provides online access to millions of research documents in electrical engineering, computer science, and electronics, including AI research.

ScienceDirect: A leading full-text scientific database offering journal articles and book chapters, including extensive AI research materials.

Nature - AI subject area: Offers a collection of articles, news, and commentaries on AI from one of the leading science journals.

Springer - AI journal: Provides a vast array of journals and books in AI.

MIT Technology Review: Offers insights, news, and analysis about AI and emerging technology.

Sources

- ChatGPT 4.0

Microsoft Copilot

- https://copilot.microsoft.com/

- https://developer.microsoft.com/en-us/copilot

- https://blogs.microsoft.com/

FraudGPT

- https://cybersecuritynews.com/fraudgpt-new-black-hat-ai-tool/

- https://thehackernews.com/2023/07/new-ai-tool-fraudgpt-emerges-tailored.html

WormGPT

- https://thehackernews.com/2023/07/wormgpt-new-ai-tool-allows.html

- https://news.sky.com/story/wormgpt-ai-tool-designed-to-help-cybercriminals-will-let-hackers-develop-attacks-on-large-scale-experts-warn-12964220